You need a hammer! We will come back to this.

Artificial intelligence is advancing at a breakneck pace, defying skeptics who claim progress has plateaued. The recent "strawberry" announcement is a testament to this relentless development. Despite murmurs about scaling laws and supposed limitations, AI models are becoming increasingly sophisticated, tackling challenges that some skeptics deemed insurmountable only weeks ago.

Consider the long-standing critique that LLMs couldn't accurately count the number of 'r's in "strawberry." Some saw this flaw as systemic, a consequence of what they defined as inherent limitations. Now that the problem is solved, we expect the same detractors to unearth the next unsolved issue to keep making their point about limited utility. Detractors serve a helpful purpose in pointing out the work that needs to be done. However, one must also distinguish the problems likely to be solved in one go at some point from those that will genuinely require other approaches.

What Strawberry beta has solved is more than counting characters. We asked it to create a unique conjecture that is as simple to understand as Fermat’s Last Theorem, that cannot be proven or disproven with the math we know, and that has never been conjectured by anyone. The answer at https://geninnov.ai/s/TETbiO is staggering in its simplicity. https://geninnov.ai/s/qJNcOU shows our attempts at making the model come up with something new in quantum sciences.

On the practical side, we had the new model analyze the latest monthly MLCC data from Japan and asked whether the latest monthly jump is meaningful or merely statistical noise. The analysts we read have simply plotted the latest data point, cheered the acceleration, and attributed it to some global product announcements. The new model, with deep statistical analysis, was scathing and showed, with detailed calculations, why the jump is more likely white noise. The screenshot below is just a small part of the detailed analysis we can now summon at will.

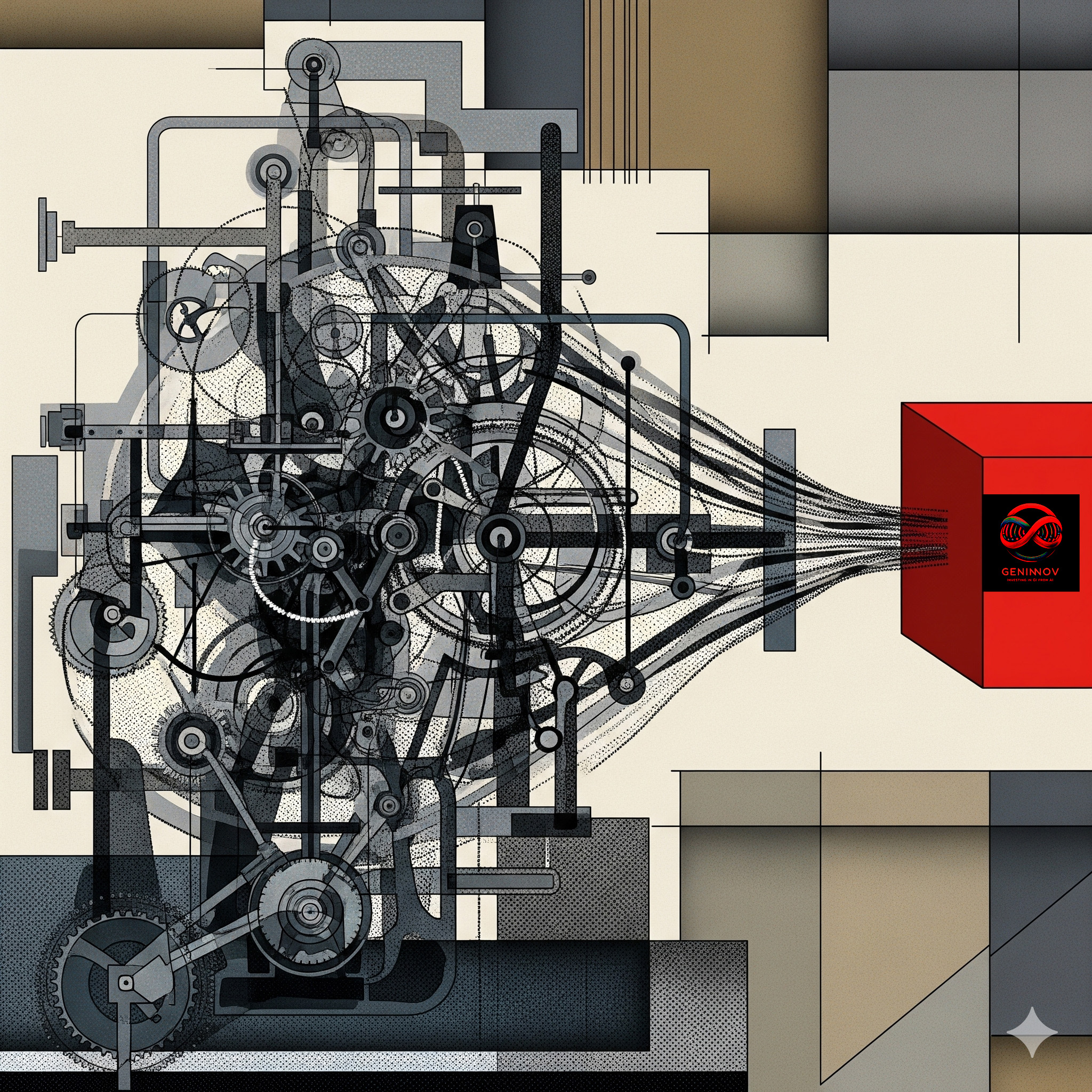
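To make the "signal or noise" question concrete, here is a minimal sketch of one simple check: compare the latest month-over-month change with the spread of earlier changes. This is not the model's actual analysis, which we have not reproduced here. The function names `latest_change_z_score` and `looks_like_noise`, the threshold of 2.0, and the sample series are all our own illustrative assumptions, and the numbers are made up, not real MLCC data.

```python
from statistics import mean, stdev


def latest_change_z_score(series):
    """Z-score of the most recent month-over-month change against earlier changes."""
    if len(series) < 4:
        raise ValueError("need at least four observations")
    # Month-over-month differences; the last one is the "jump" in question.
    changes = [later - earlier for earlier, later in zip(series, series[1:])]
    history, latest = changes[:-1], changes[-1]
    spread = stdev(history)
    if spread == 0:
        raise ValueError("historical changes have no variation")
    return (latest - mean(history)) / spread


def looks_like_noise(series, threshold=2.0):
    """True when the latest change sits within `threshold` standard deviations."""
    return abs(latest_change_z_score(series)) < threshold


# Hypothetical monthly figures, purely for illustration.
choppy_series = [100, 102, 99, 103, 100, 104, 101, 105]
breakout_series = [100, 101, 100, 101, 100, 101, 100, 130]

print(looks_like_noise(choppy_series))    # latest rise is typical of past swings
print(looks_like_noise(breakout_series))  # latest rise dwarfs past swings
```

A check this crude ignores seasonality and autocorrelation, so it is a starting point for the question rather than a verdict.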

But this brings us to a crucial realization: not every problem requires an LLM, just as you wouldn't use a quantum computer to balance your checkbook. Relying on an LLM for straightforward, deterministic tasks is inefficient and potentially error-prone. For many tasks, a simpler tool, such as a calculator, a GPS, or a traditional database, is the more efficient and elegant solution.

This calls for a more nuanced approach to AI development and deployment. Many algorithms designed for particular tasks will continue to outperform generalist approaches; a question like 2+2 may be better answered by a calculator than by a data-centre network performing a trillion-by-trillion matrix multiplication in search of an answer.
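The idea of reaching for the simple tool first can be sketched in a few lines. The example below is a hypothetical illustration, not any vendor's design: `evaluate_arithmetic`, `count_letter`, and `answer` are names we invented, and `llm_fallback` stands in for whatever model call one would actually make.

```python
import ast
import operator

# Only the four basic operators are allowed; anything else is rejected.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate_arithmetic(expression):
    """Evaluate plain arithmetic such as '2 + 2' without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("not plain arithmetic")

    return walk(ast.parse(expression, mode="eval"))


def count_letter(word, letter):
    """Deterministic letter count, case-insensitive."""
    return word.lower().count(letter.lower())


def answer(question, llm_fallback):
    """Try the calculator first; hand everything else to the (hypothetical) LLM."""
    try:
        return evaluate_arithmetic(question)
    except (ValueError, SyntaxError):
        return llm_fallback(question)


print(answer("2 + 2", llm_fallback=lambda q: "ask the model"))
print(count_letter("strawberry", "r"))
```

The deterministic path costs microseconds and cannot hallucinate; the fallback is reserved for questions that actually need a language model.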

Companies like OpenAI, with their laser focus on Artificial General Intelligence (AGI), are pushing the boundaries of what LLMs can achieve. Their pursuit of AGI may lead to LLMs being applied to tasks they aren't yet optimized for, but their over-reliance on LLMs could also stem from having little in their existing product portfolio to integrate with.

With their rich ecosystems of products and services, companies like Apple, Meta, Microsoft, and Google are uniquely positioned to integrate AI with existing applications. By combining the strengths of LLMs with specialized tools, they can deliver powerful and precise solutions. With all the focus on Strawberry, few noticed Google’s announcement of DataGemma on the same day, which significantly pushes the boundaries of this type of integration.

After last week's announcements, we hope that those who fear the models can no longer improve much, and have begun to stagnate, decay, or worse, will feel more reassured.